13 Appendix: Enough Python for this Book

This appendex introduces the Python programming language, and it is intended for readers who are new to programming in Python. Unlike other introductions to Python, this presentation focuses on linguistically-motivated tasks.

We'll address the following questions:

- How can you get started with Python, and use the interpreter and editor?

- How do the fundamental building blocks of Python work, such as loops, functions and assignment?

- How can you combine these building blocks in order to perform more complex tasks?

This chapter contains many examples and exercises. If you are new to programming, we encourage you to work through them carefully and consult other introductions to programming if necessary.

The Python website provides extensive documentation, including introductory tutorials and links to other resources. Please see http://docs.python.org/. When using the online Python documentation, be aware that your installed version might be different from the version of the documentation you are reading. You can easily check what version you have, with import sys; sys.version, and then consult version-specific documentation on the Python website.

13.1 Getting Started

One of the friendly things about Python is that it allows you to type directly into the interpreter — the system that will be running your Python programs. You can access the Python interpreter using a simple graphical interface called the Interactive Development Environment (IDLE). On a Mac you can find this under Applications→MacPython, and on Windows under All Programs→Python. Under Unix you can run Python from the shell by typing idle (if this is not installed, try typing python). The interpreter will print a blurb about your Python version; simply check that you are running Python 3.2 or later (here it is version 3.4.2):

|

Note

If you are unable to run the Python interpreter, you probably don't have Python installed correctly. Please visit http://python.org/ for detailed instructions. NLTK 3.0 works for Python 2.6, 2.7 and 3.2 onwards. If you are using Python 2.6 or 2.7, you need to type: from __future__ import division, print_function at the start of your session, in order for our examples to work.

The >>> prompt indicates that the Python interpreter is now waiting for input. When copying examples from this book, don't type the ">>>" yourself. Now, let's begin by using Python as a calculator:

|

Once the interpreter has displayed the answer, the prompt reappears. This means the Python interpreter is waiting for you to give another instruction.

Note

Your Turn: Enter a few more expressions of your own. You can use asterisk (*) for multiplication and slash (/) for division, and parentheses for bracketing expressions.

The preceding examples demonstrate how you can work interactively with the Python interpreter, experimenting with various expressions in the language to see what they do. Now let's try a nonsensical expression to see how the interpreter handles it:

|

This produced a syntax error. In Python, it doesn't make sense to end an instruction with a plus sign. The Python interpreter indicates the line where the problem occurred (line 1 of <stdin>, which stands for "standard input").

Now that we can use the Python interpreter we're ready to start learning Python, and applying it to some language data.

13.2 Texts as Lists of Words

What is a text? At one level, it is a sequence of symbols on a page such as this one. At another level, it is a sequence of chapters, made up of a sequence of sections, where each section is a sequence of paragraphs, and so on. Programmers may think of a text as a sequence of characters in a file. However, for our purposes, a text is a sequence of words and punctuation. To get started with texts, you first need to understand the "list" data type.

Lists

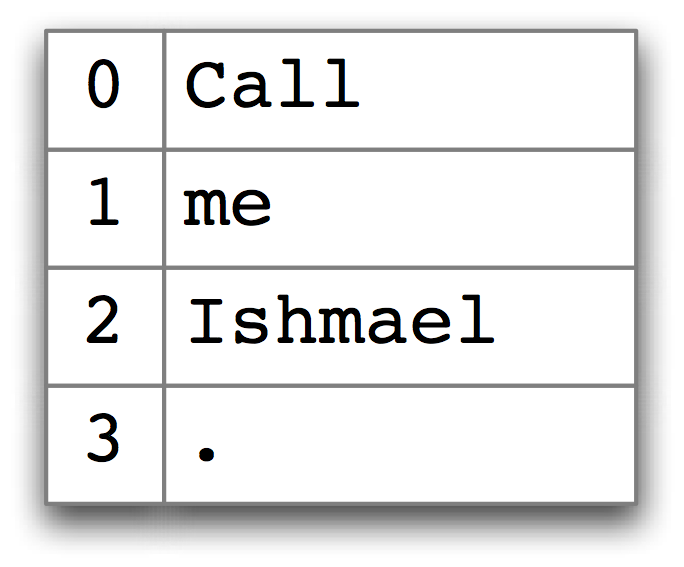

Here's how we will represent a text in Python:

|

After the prompt we've given a name we made up, sent, short for

"sentence," followed

by the equals sign, and then some quoted words, separated with

commas, and surrounded with brackets. This bracketed material

is known as a list in Python.

We can inspect it by typing the name ![[1]](callouts/callout1.gif) . We can ask for its

length using the built-in len function

. We can ask for its

length using the built-in len function ![[2]](callouts/callout2.gif) .

.

|

The expression len(sent) means that we are 'calling' a function len with an argument sent. When we don't know, or don't care, about the particular argument, we'll write len() to refer to the function. We'll look more at functions, and how you can define your own, in 13.3.

Note

Your Turn: Make up a few sentences of your own, by typing a name, equals sign, and a list of words, like this: ex1 = ['Monty', 'Python', 'and', 'the', 'Holy', 'Grail'].

Once you're comfortable defining little texts like this, let's try another built-in function:

|

So sorted() will take a list as argument and give us back the sorted version of the list. You might wonder why '?' occurs first in the sequence. In fact it it is standard for computers to sort punctuation and uppercase letters before lowercase letters. This is due to the computer's underlying numerical representation of text, which we can see by asking Python to tell us the ordinal value of a character:

|

Note

Your Turn: Try sorting the words in one of the sentences that you made up.

A pleasant surprise is that we can use Python's addition operator on lists. Adding two lists creates a new list with everything from the first list, followed by everything from the second list:

|

This special use of the addition operation is called concatenation; it combines the lists together into a single list. We can concatenate sentences to build up a text.

We don't have to type out the lists each time; we can name them, then refer back to them using those names:

|

We can count the number of times a word occurs in a list. To do this, we specify the name of the list, followed by a period, then the method name count(), followed by the word in parentheses:

|

Methods in Python work pretty much like functions in that they are applied to arguments; we'll say more about them, and the notation that's used, later in this chapter.

We can also append an item to a list. When we use append(), no result is printed, but the list is modified and we can inspect it in the usual way.

|

Note

Your Turn: Before continuing, experiment with your own example sentences and make sure you can use Python's general-purpose functions len() and sorted(), and the special list methods count() and append().

Indexing Lists

As we have seen, a text in Python is a list of words, represented using a combination of brackets and quotes. We can use Python to perform various operations on a text, such as getting its length, or counting the number of times a certain word appears.

Each item of a list has a position, or index, starting from zero. Here are the indexes for our example sentence:

| What | is | the | airspeed | of | an | unladen | swallow | ? | 0 1 2 3 4 5 6 7 8 9

We look up the items of a list by index as follows:

|

We can do the converse; given a word, find the index of its first occurrence:

|

Notice that our indexes start from zero: sent element zero, i.e., sent[0], is the first word What, and sent[7] is swallow. The reason is simple: the moment Python accesses the content of a list from the computer's memory, it is already at the first element; we have to tell it how many elements forward to go to access a given item. Thus, zero steps forward gives us the first element.

Note

This practice of counting from zero is initially confusing, but typical of modern programming languages. You'll quickly get the hang of it if you've mastered the system of counting centuries where 19XY is a year in the 20th century, or if you live in a country where the floors of a building are numbered from 1, and so walking up n-1 flights of stairs takes you to level n.

If we accidentally use an index that is too large, we get an error:

|

This time it is not a syntax error because the program fragment is syntactically correct. Instead, it is a runtime error, and it produces a message that shows the context of the error, followed by the name of the error, i.e., IndexError, and a brief explanation.

Slicing Lists

We can access sublists as well, a technique is known as slicing.

|

Note that the slice [2:8] goes from element 2 up to (but not including) element 8. Our visualization of lists, with the numbers appearing to the left of their cells, is a helpful way to remember this behavior of slicing:

| What | is | the | airspeed | of | an | unladen | swallow | ? | 0 1 2 3 4 5 6 7 8 9

We can also use slicing to isolate a single item in a list, e.g., sent[3:4]. But by contrast with sent[3], which returns an element of the list sent, slicing always returns a sublist, even though in this case the sublist only contains a single item:

|

Slicing is useful when we want to extract manageable chunks of language from large texts. Here we have loaded the entire text of Monty Python and the Holy Grail, and have sliced out 25 items:

|

As the next example shows,

we can omit the first number if the slice begins at the start of the

list ![[1]](callouts/callout1.gif) , and we can omit the second number if the slice goes to the end

, and we can omit the second number if the slice goes to the end ![[2]](callouts/callout2.gif) :

:

|

We can modify an element of a list by assigning to one of its index values. Consider the following code:

Observe that we put sent[0] on the left of the equals sign ![[1]](callouts/callout1.gif) . We also

replaced an entire slice with new material

. We also

replaced an entire slice with new material ![[2]](callouts/callout2.gif) . A consequence of this

last change is that the list only has four elements, and so trying to access a later value

generated an error

. A consequence of this

last change is that the list only has four elements, and so trying to access a later value

generated an error ![[3]](callouts/callout3.gif) .

.

Negative indexes can be used to refer to elements relative to the end of a list, e.g. sent[-1] gives the last element of sent.

Note

Your Turn: Take a few minutes to define a sentence of your own and modify individual words and groups of words (slices) using the same methods used earlier. Check your understanding by trying the exercises on lists at the end of this chapter.

Variables

Several times now, we have seen lines like the following:

|

Such lines have the form: variable = expression. Python evaluates the expression on the right, and saves its result to the variable on the left. This process is called assignment. It does not generate any output; you have to type the variable on a line by itself in order to inspect its contents. The equals sign is slightly misleading, since information is moving from the right side to the left. It might help to think of it as a left-arrow. The name of the variable can be anything you like, e.g., my_sent, sentence, xyzzy. It must start with a letter, and can include numbers and underscores. Here are some examples of variables and assignments:

|

Remember that uppercase letters are sorted before lowercase letters.

Note

In the previous example we split the definition of my_sent over two lines. Python expressions can be split across multiple lines, so long as this happens within any kind of brackets. Python uses the "..." prompt to indicate that more input is expected. It doesn't matter how much indentation is used in these continuation lines, but some indentation usually makes them easier to read.

It is good to choose meaningful variable names to remind you — and to help anyone else who reads your Python code — what your code is meant to do. Python does not try to make sense of the names; it blindly follows your instructions, and does not object if you do something confusing, such as one = 'two' or two = 3. The only restriction is that a variable name cannot be any of Python's reserved words, such as def, if, not, and import. If you use a reserved word, Python will produce a syntax error:

|

Caution!

Take care with your choice of names (or identifiers) for Python variables. First, you should start the name with a letter, optionally followed by more letters and digits. Thus, abc23 is fine, but 23abc will cause a syntax error. Names are case-sensitive, which means that myVar and myvar are distinct variables. Variable names cannot contain whitespace, but you can separate words using an underscore, e.g., my_var. Be careful not to insert a hyphen instead of an underscore: my-var is wrong, since Python interprets the "-" as a minus sign.

13.3 Vocabularies as Sets of Words

The most obvious fact about texts that emerges from the examples in 4 is that they differ in the vocabulary they use. In this section we will see how to use the computer to count the words in a text in a variety of useful ways. As explained in 4, you will first need to install NLTK's data, and then load the example texts from NLTK's book module, using the command from nltk.book import *.

Let's begin by finding out the length of a text from start to finish, in terms of the words and punctuation symbols that appear. Here we will do it for text3, which contains the text of the Book of Genesis:

|

So Genesis has 44,764 words and punctuation symbols, or "tokens." A token is the technical name for a sequence of characters — such as hairy, his, or :) — that we want to treat as a group. When we count the number of tokens in a text, say, the phrase to be or not to be, we are counting occurrences of these sequences. Thus, in our example phrase there are two occurrences of to, two of be, and one each of or and not. But there are only four distinct vocabulary items in this phrase. How many distinct words does the book of Genesis contain? To work this out in Python, we have to pose the question slightly differently. The vocabulary of a text is just the set of tokens that it uses, since in a set, all duplicates are collapsed together. In Python we can obtain the vocabulary items of text3 with the command: set(text3). When you do this, many screens of words will fly past. Now try the following:

|

By applying Python's built-in sorted function to the expression set(text3)

![[1]](callouts/callout1.gif) , we obtain a sorted list of vocabulary items, beginning

with various punctuation symbols and continuing with words starting with A.

We discover the size of the vocabulary indirectly, by asking

for the number of items in this set, and again we can use len to

obtain this number

, we obtain a sorted list of vocabulary items, beginning

with various punctuation symbols and continuing with words starting with A.

We discover the size of the vocabulary indirectly, by asking

for the number of items in this set, and again we can use len to

obtain this number ![[2]](callouts/callout2.gif) . Although it has 44,764 tokens, this book

has only 2,789 distinct words, or "word types."

A word type is the form or spelling of the word independently of its

specific occurrences in a text — that is, the

word considered as a unique item of vocabulary. Our count of 2,789 items

will include punctuation symbols, so we will generally call these

unique items types instead of word types.

. Although it has 44,764 tokens, this book

has only 2,789 distinct words, or "word types."

A word type is the form or spelling of the word independently of its

specific occurrences in a text — that is, the

word considered as a unique item of vocabulary. Our count of 2,789 items

will include punctuation symbols, so we will generally call these

unique items types instead of word types.

Now, let's calculate a measure of the lexical richness of the text. The next example shows us that the number of distinct words is just 6% of the total number of words, or equivalently that each word is used 16 times on average (remember if you're using Python 2, to start with from __future__ import division).

|

Next, let's focus on particular words. We can count how often a word occurs in a text, and compute what percentage of the text is taken up by a specific word:

|

Note

Your Turn: How many times does the word lol appear in text5? How much is this as a percentage of the total number of words in this text?

Python set objects support a variety of standard set operations as listed in 13.1. We can use these to implement a variety of operations on vocabularies, e.g. unionining multiple vocabularies into a single one, or finding words that occur in one text but not another, and so on.

| Function | Meaning |

|---|---|

| len(s) | the cardinality of the set |

| s.add(x) | add x to s |

| s.remove(x) | remove x from s |

| x in s | test if x is a member of s |

| s <= t | test if s is a subset of t |

| s | t | return the union of s and t |

| s |= t | update s, adding elements from t |

| s & t | return the intersection of s and t |

| s &= t | update s, keeping only elements also found in t |

| s - t | return the difference between s and t |

| s -= t | update s, removing elements found in t |

| s ^ t | return the elements in s or t but not both |

| s ^= t | update s, keeping elements found in either s or t but not both |

| s.isdisjoint() | test if s has no elements in common with t |

13.4 Making Decisions

So far, our little programs have had some interesting qualities: the ability to work with language, and the potential to save effort through automation. A key feature of programming is the ability of machines to make decisions on our behalf, executing instructions when certain conditions are met, or repeatedly looping through text data until some condition is satisfied.

Conditionals

Python supports a wide range of operators, such as < and >=, for testing the relationship between values. The full set of these relational operators is shown in 13.2.

| Operator | Relationship |

|---|---|

| < | less than |

| <= | less than or equal to |

| == | equal to (note this is two "=" signs, not one) |

| != | not equal to |

| > | greater than |

| >= | greater than or equal to |

Each of these operators allow us to express a Python "test" that yields either true or false. Let's verify that these operators do indeed behave in this way:

|

Here are some examples of using the operators to express a criterion, or condition, for selecting different words from a sentence of news text — only the operator is changed from one line to the next. They use the first sentence from the Wall Street Journal corpus, possibly the most well-known sentence in NLP, naming Pierre Vinken of Elsevier Publishers, who was coincidentally an early builder of corpora.

|

There is a common pattern to all of these examples: [w for w in text if condition]. This syntactic form is a Python idiom which defines a new list by performing the same operation on every element of an existing list. In the preceding examples, it goes through each word in sent7, assigning each one in turn to the variable w and testing whether it meets the relevant condition. So the first of the preceding examples could be paraphrased as: "the list which consist of all words w in sent7, as long as the length of w is less than 4".

Note

The notation just described is called a "list comprehension." This is our first example of a Python idiom, a fixed notation that we use habitually without bothering to analyze it each time. Mastering such idioms is an important part of becoming a fluent Python programmer.

In the cases shown in the previous code example, the condition is always a numerical comparison. However, we can also test various properties of words, using the methods listed in 13.3.

| Function | Meaning |

|---|---|

| s.startswith(t) | test if s starts with t |

| s.endswith(t) | test if s ends with t |

| t in s | test if t is a substring of s |

| s.islower() | test if s contains cased characters and all are lowercase |

| s.isupper() | test if s contains cased characters and all are uppercase |

| s.isalpha() | test if s is non-empty and all characters in s are alphabetic |

| s.isalnum() | test if s is non-empty and all characters in s are alphanumeric |

| s.isdigit() | test if s is non-empty and all characters in s are digits |

| s.istitle() | test if s contains cased characters and is titlecased (i.e. all words in s have initial capitals) |

Again, we can do some simple checks that these methods do what we expect.

|

Here are some examples of these operators being used to select words from our texts: words ending with -ableness; words containing gnt; words having an initial capital; and words consisting entirely of digits.

|

We can also create more complex conditions. If c is a condition, then not c is also a condition. If we have two conditions c1 and c2, then we can combine them to form a new condition using conjunction and disjunction: c1 and c2, c1 or c2.

Note

Your Turn: Run the following examples and try to explain what is going on in each one. Next, try to make up some conditions of your own.

|

Operating on Every Element

We can extend the notation from the previous section to perform an operation on every item of a list. For example:

|

These expressions have the form [f(w) for ...] or [w.f() for ...], where f is a function that operates on a word to compute its length, or to convert it to uppercase. For now, you don't need to understand the difference between the notations f(w) and w.f().

Let's return to the question of vocabulary size, and apply the same idiom here:

|

Now that we are not double-counting words like This and this, which differ only in capitalization, we've wiped 2,000 off the vocabulary count! We can go a step further and eliminate numbers and punctuation from the vocabulary count by filtering out any non-alphabetic items:

|

This example is slightly complicated: it lowercases all the purely alphabetic items. Perhaps it would have been simpler just to count the lowercase-only items, but this gives the wrong answer (why?).

Don't worry if you don't feel confident with list comprehensions yet. You'll see more examples along with explanations here, and in the body of the book.

Nested Code Blocks

Most programming languages permit us to execute a block of code when a conditional expression, or if statement, is satisfied. We already saw examples of conditional tests in code like [w for w in sent7 if len(w) < 4]. In the following program, we have created a variable called word containing the string value 'cat'. The if statement checks whether len(word) < 5. It is, so the body of the if statement is invoked and the print statement is executed, displaying a message to the user. Remember to indent the print statement by typing four spaces.

|

When we use the Python interpreter we have to add an extra blank line ![[1]](callouts/callout1.gif) in order for it to detect that the nested block is complete.

in order for it to detect that the nested block is complete.

If we change the conditional test to len(word) >= 5, to check that the length of word is greater than or equal to 5, then the test will no longer be true. This time, the body of the if statement will not be executed, and no message is shown to the user:

|

An if statement is known as a control structure because it controls whether the code in the indented block will be run. Another control structure is the for loop. Try the following, and remember to include the colon and the four spaces:

|

This is called a loop because Python executes the code in circular fashion. It starts by performing the assignment word = 'Call', effectively using the word variable to name the first item of the list. Then, it displays the value of word to the user. Next, it goes back to the for statement, and performs the assignment word = 'me', before displaying this new value to the user, and so on. It continues in this fashion until every item of the list has been processed.

Looping with Conditions

Now we can combine the if and for statements. We will loop over every item of the list, and print the item only if it ends with the letter l. We'll pick another name for the variable to demonstrate that Python doesn't try to make sense of variable names.

|

You will notice that if and for statements have a colon at the end of the line, before the indentation begins. In fact, all Python control structures end with a colon. The colon indicates that the current statement relates to the indented block that follows.

We can also specify an action to be taken if the condition of the if statement is not met. Here we see the elif (else if) statement, and the else statement. Notice that these also have colons before the indented code.

|

As you can see, even with this small amount of Python knowledge, you can start to build multiline Python programs. It's important to develop such programs in pieces, testing that each piece does what you expect before combining them into a program. This is why the Python interactive interpreter is so invaluable, and why you should get comfortable using it.

Finally, let's combine the idioms we've been exploring. First, we create a list of cie and cei words, then we loop over each item and print it. Notice the extra information given in the print statement: end=' '. This tells Python to print a space (not the default newline) after each word.

|

13.5 Sequences

So far, the only data type we have worked with is the list data type. Lists are one of several kinds of sequence data type. There are two other important kinds of sequence that you need to know about: strings and tuples.

Strings

Some of the methods we used to access the elements of a list also work with individual words, or strings.

Here, we assigned a string to a variable ![[1]](callouts/callout1.gif) ,

indexed a string

,

indexed a string ![[2]](callouts/callout2.gif) , and sliced a string

, and sliced a string ![[3]](callouts/callout3.gif) .

We can also perform multiplication and addition with strings:

.

We can also perform multiplication and addition with strings:

|

We can join the words of a list to make a single string, or split a string into a list, as follows:

|

Tuples

Another kind of sequence is called a tuple.

Tuples are formed with the comma operator ![[1]](callouts/callout1.gif) , and typically enclosed

in parentheses. Like lists and strings, tuples can be indexed

, and typically enclosed

in parentheses. Like lists and strings, tuples can be indexed ![[2]](callouts/callout2.gif) and sliced

and sliced ![[3]](callouts/callout3.gif) , and have a length

, and have a length ![[4]](callouts/callout4.gif) .

.

|

Caution!

Tuples are constructed using the comma operator. Parentheses are a more general feature of Python syntax, designed for grouping. A tuple containing the single element 'snark' is defined by adding a trailing comma, like this: "'snark',". The empty tuple is a special case, and is defined using empty parentheses ().

Let's compare strings, lists and tuples directly, and do the indexing, slice, and length operation on each type:

|

Notice in this code sample that we computed multiple values on a single line, separated by commas. These comma-separated expressions are actually just tuples — Python allows us to omit the parentheses around tuples if there is no ambiguity. When we print a tuple, the parentheses are always displayed.

Operating on Sequence Types

We can iterate over the items in a sequence s in a variety of useful ways, as shown in 13.4.

| Python Expression | Comment |

|---|---|

| for item in s | iterate over the items of s |

| for item in sorted(s) | iterate over the items of s in order |

| for item in set(s) | iterate over unique elements of s |

| for item in reversed(s) | iterate over elements of s in reverse |

| for item in set(s).difference(t) | iterate over elements of s not in t |

The sequence functions illustrated in 13.4 can be combined in various ways; for example, to get unique elements of s sorted in reverse, use reversed(sorted(set(s))). We can randomize the contents of a list s before iterating over them, using random.shuffle(s).

We can convert between these sequence types. For example, tuple(s) converts any kind of sequence into a tuple, and list(s) converts any kind of sequence into a list. We can convert a list of strings to a single string using the join() function, e.g. ':'.join(words).

In the next example, we use tuples to re-arrange the contents of our list. (We can omit the parentheses because the comma has higher precedence than assignment.)

|

This is an idiomatic and readable way to move items inside a list. It is equivalent to the following traditional way of doing such tasks that does not use tuples (notice that this method needs a temporary variable tmp).

|

As we have seen, Python has sequence functions such as sorted() and reversed() that rearrange the items of a sequence. There are also functions that modify the structure of a sequence and which can be handy for language processing. Thus, zip() takes the items of two or more sequences and "zips" them together into a single list of tuples. Given a sequence s, enumerate(s) returns pairs consisting of an index and the item at that index.

|

Note

It is a widespread feature of Python 3 and NLTK 3 to only perform computation when required (a feature known as "lazy evaluation"). If you ever see a result like <zip object at 0x10d005448> when you expect to see a sequence, you can force the object to be evaluated just by putting it in a context that expects a sequence, like list(x), or for item in x.

Lists vs Tuples

A list is typically a sequence of objects all having the same type, of arbitrary length. We often use lists to hold sequences of words. In contrast, a tuple is typically a collection of objects of different types, of fixed length. We often use a tuple to hold a record, aggregating the properties of an object.

A further difference between lists and tuples in Python is that lists are mutable, while tuples are immutable. In other words, lists can be modified, while tuples cannot.

A prototypical example of a tuple is a lexical entry, with its headword, part of speech, and definition. If there was more than one definition, we would model this using a list. The lexicon itself could also be modeled as a list:

|

13.6 Dictionaries

A dictionary, also known as a mapping, associative array or hash array, is a data type for storing correspondences between keys and values. For instance, we could define a dictionary that maps from words to their definitions (like a real-world dictionary). But we could also map from a word to its part of speech, or a word and its frequency, and so forth.

Lists vs Dictionaries

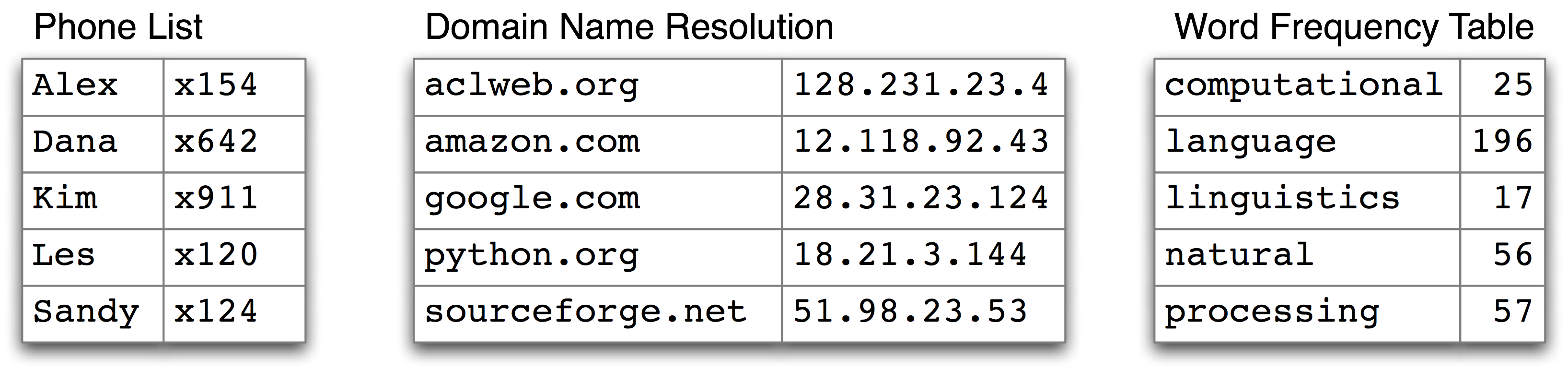

A text, as we have seen, is treated in Python as a list of words. An important property of lists is that we can "look up" a particular item by giving its index, e.g. text1[100]. Notice how we specify a number, and get back a word. We can think of a list as a simple kind of table, as shown in 13.1.

Figure 13.1: List Look-up: we access the contents of a Python list with the help of an integer index.

Contrast this situation with frequency distributions (4), where we specify a word, and get back a number, e.g. fdist['monstrous'], which tells us the number of times a given word has occurred in a text. Look-up using words is familiar to anyone who has used a dictionary. Some more examples are shown in 13.2.

Figure 13.2: Dictionary Look-up: we access the entry of a dictionary using a key such as someone's name, a web domain, or an English word; other names for dictionary are map, hashmap, hash, and associative array.

In the case of a phonebook, we look up an entry using a name, and get back a number. When we type a domain name in a web browser, the computer looks this up to get back an IP address. A word frequency table allows us to look up a word and find its frequency in a text collection. In all these cases, we are mapping from names to numbers, rather than the other way around as with a list. In general, we would like to be able to map between arbitrary types of information. 13.5 lists a variety of linguistic objects, along with what they map.

| Linguistic Object | Maps From | Maps To |

|---|---|---|

| Document Index | Word | List of pages (where word is found) |

| Thesaurus | Word sense | List of synonyms |

| Dictionary | Headword | Entry (part-of-speech, sense definitions, etymology) |

| Comparative Wordlist | Gloss term | Cognates (list of words, one per language) |

| Morph Analyzer | Surface form | Morphological analysis (list of component morphemes) |

Most often, we are mapping from a "word" to some structured object. For example, a document index maps from a word (which we can represent as a string), to a list of pages (represented as a list of integers). In this section, we will see how to represent such mappings in Python.

Dictionaries in Python

Python provides a dictionary data type that can be used for mapping between arbitrary types. It is like a conventional dictionary, in that it gives you an efficient way to look things up. However, as we see from 13.5, it has a much wider range of uses.

To illustrate, we define pos to be an empty dictionary and then add four entries to it, specifying the part-of-speech of some words. We add entries to a dictionary using the familiar square bracket notation:

|

So, for example, ![[1]](callouts/callout1.gif) says that

the part-of-speech of colorless is adjective, or more

specifically, that the key 'colorless'

is assigned the value 'ADJ' in dictionary pos.

When we inspect the value of pos

says that

the part-of-speech of colorless is adjective, or more

specifically, that the key 'colorless'

is assigned the value 'ADJ' in dictionary pos.

When we inspect the value of pos ![[2]](callouts/callout2.gif) we see

a set of key-value pairs. Once we have populated the dictionary

in this way, we can employ the keys to retrieve values:

we see

a set of key-value pairs. Once we have populated the dictionary

in this way, we can employ the keys to retrieve values:

|

Of course, we might accidentally use a key that hasn't been assigned a value.

|

This raises an important question. Unlike lists and strings, where we

can use len() to work out which integers will be legal indexes,

how do we work out the legal keys for a dictionary? If the dictionary

is not too big, we can simply inspect its contents by evaluating the

variable pos. As we saw above (line ![[2]](callouts/callout2.gif) ), this gives

us the key-value pairs. Notice that they are not in the same order they

were originally entered; this is because dictionaries are not sequences

but mappings (cf. 13.2), and the keys are not inherently

ordered.

), this gives

us the key-value pairs. Notice that they are not in the same order they

were originally entered; this is because dictionaries are not sequences

but mappings (cf. 13.2), and the keys are not inherently

ordered.

Alternatively, to just find the keys, we can convert the

dictionary to a list ![[1]](callouts/callout1.gif) — or use

the dictionary in a context where a list is expected,

as the parameter of sorted()

— or use

the dictionary in a context where a list is expected,

as the parameter of sorted() ![[2]](callouts/callout2.gif) ,

or in a for loop

,

or in a for loop ![[3]](callouts/callout3.gif) .

.

|

Note

When you type list(pos) you might see a different order to the one shown above. If you want to see the keys in order, just sort them.

As well as iterating over all keys in the dictionary with a for loop, we can use the for loop as we did for printing lists:

|

Finally, the dictionary methods keys(), values() and

items() allow us to access the keys, values, and key-value pairs as separate lists.

(For technical reasons that will not concern us here, we have to

explicitly convert the results of these methods to lists.)

We can even sort tuples ![[1]](callouts/callout1.gif) , which orders them according to their first element

(and if the first elements are the same, it uses their second elements).

, which orders them according to their first element

(and if the first elements are the same, it uses their second elements).

|

We want to be sure that when we look something up in a dictionary, we only get one value for each key. Now suppose we try to use a dictionary to store the fact that the word sleep can be used as both a verb and a noun:

|

Initially, pos['sleep'] is given the value 'V'. But this is immediately overwritten with the new value 'N'. In other words, there can only be one entry in the dictionary for 'sleep'. However, there is a way of storing multiple values in that entry: we use a list value, e.g. pos['sleep'] = ['N', 'V']. In fact, this is what we saw in 3 for the CMU Pronouncing Dictionary, which stores multiple pronunciations for a single word.

Defining Dictionaries

We can use the same key-value pair format to create a dictionary. There's a couple of ways to do this, and we will normally use the first:

|

Note that dictionary keys must be immutable types, such as strings and tuples. If we try to define a dictionary using a mutable key, we get a TypeError:

|

Default Dictionaries

If we try to access a key that is not in a dictionary, we get an error. However, its often useful if a dictionary can automatically create an entry for this new key and give it a default value, such as zero or the empty list. For this reason, a special kind of dictionary called a defaultdict is available. In order to use it, we have to supply a parameter which can be used to create the default value, e.g. int, float, str, list, dict, tuple.

|

Note

These default values are actually functions that convert other objects to the specified type (e.g. int("2"), list("2")). When they are called with no parameter — int(), list() — they return 0 and [] respectively.

The above examples specified the default value of a dictionary entry to

be the default value of a particular data type. However, we can specify

any default value we like, simply by providing the name of a function

that can be called with no arguments to create the required value.

Let's return to our part-of-speech example, and create a dictionary

whose default value for any entry is 'N' ![[1]](callouts/callout1.gif) .

When we access a non-existent entry

.

When we access a non-existent entry ![[2]](callouts/callout2.gif) ,

it is automatically added to the dictionary

,

it is automatically added to the dictionary ![[3]](callouts/callout3.gif) .

.

|

Note

The above example used a lambda expression, to be introduced in 13.8. This lambda expression specifies no parameters, so we call it using parentheses with no arguments. Thus, the definitions of f and g below are equivalent:

|

Let's see how default dictionaries could be used in a more substantial language processing task. Many language processing tasks — including tagging — struggle to correctly process the hapaxes of a text. They can perform better with a fixed vocabulary and a guarantee that no new words will appear. We can preprocess a text to replace low-frequency words with a special "out of vocabulary" token UNK, with the help of a default dictionary. (Can you work out how to do this without reading on?)

We need to create a default dictionary that maps each word to its replacement. The most frequent n words will be mapped to themselves. Everything else will be mapped to UNK.

|

Incrementally Updating a Dictionary

We can employ dictionaries to count occurrences, emulating the method for tallying words shown in fig-tally. We begin by initializing an empty defaultdict, then process each part-of-speech tag in the text. If the tag hasn't been seen before, it will have a zero count by default. Each time we encounter a tag, we increment its count using the += operator.

| ||

Example 13.3 (code_dictionary.py): Figure 13.3: Incrementally Updating a Dictionary, and Sorting by Value |

The listing in 13.3 illustrates an important idiom for sorting a dictionary by its values, to show words in decreasing order of frequency. The first parameter of sorted() is the items to sort, a list of tuples consisting of a POS tag and a frequency. The second parameter specifies the sort key using a function itemgetter(). In general, itemgetter(n) returns a function that can be called on some other sequence object to obtain the nth element, e.g.:

|

The last parameter of sorted() specifies that the items should be returned in reverse order, i.e. decreasing values of frequency.

There's a second useful programming idiom at the beginning of 13.3, where we initialize a defaultdict and then use a for loop to update its values. Here's a schematic version:

Here's another instance of this pattern, where we index words according to their last two letters:

|

The following example uses the same pattern to create an anagram dictionary. (You might experiment with the third line to get an idea of why this program works.)

|

Since accumulating words like this is such a common task, NLTK provides a more convenient way of creating a defaultdict(list), in the form of nltk.Index().

|

Note

nltk.Index is a defaultdict(list) with extra support for initialization. Similarly, nltk.FreqDist is essentially a defaultdict(int) with extra support for initialization (along with sorting and plotting methods).

Complex Keys and Values

We can use default dictionaries with complex keys and values. Let's study the range of possible tags for a word, given the word itself, and the tag of the previous word. We will see how this information can be used by a POS tagger.

|

This example uses a dictionary whose default value for an entry

is a dictionary (whose default value is int(), i.e. zero).

Notice how we iterated over the bigrams of the tagged

corpus, processing a pair of word-tag pairs for each iteration ![[1]](callouts/callout1.gif) .

Each time through the loop we updated our pos dictionary's

entry for (t1, w2), a tag and its following word

.

Each time through the loop we updated our pos dictionary's

entry for (t1, w2), a tag and its following word ![[2]](callouts/callout2.gif) .

When we look up an item in pos we must specify a compound key

.

When we look up an item in pos we must specify a compound key ![[3]](callouts/callout3.gif) ,

and we get back a dictionary object.

A POS tagger could use such information to decide that the

word right, when preceded by a determiner, should be tagged as ADJ.

,

and we get back a dictionary object.

A POS tagger could use such information to decide that the

word right, when preceded by a determiner, should be tagged as ADJ.

Inverting a Dictionary

Dictionaries support efficient lookup, so long as you want to get the value for any key. If d is a dictionary and k is a key, we type d[k] and immediately obtain the value. Finding a key given a value is slower and more cumbersome:

|

If we expect to do this kind of "reverse lookup" often, it helps to construct a dictionary that maps values to keys. In the case that no two keys have the same value, this is an easy thing to do. We just get all the key-value pairs in the dictionary, and create a new dictionary of value-key pairs. The next example also illustrates another way of initializing a dictionary pos with key-value pairs.

|

Let's first make our part-of-speech dictionary a bit more realistic and add some more words to pos using the dictionary update() method, to create the situation where multiple keys have the same value. Then the technique just shown for reverse lookup will no longer work (why not?). Instead, we have to use append() to accumulate the words for each part-of-speech, as follows:

|

Now we have inverted the pos dictionary, and can look up any part-of-speech and find all words having that part-of-speech. We can do the same thing even more simply using NLTK's support for indexing as follows:

|

A summary of Python's dictionary methods is given in 13.6.

| Example | Description |

|---|---|

| d = {} | create an empty dictionary and assign it to d |

| d[key] = value | assign a value to a given dictionary key |

| d.keys() | the list of keys of the dictionary |

| list(d) | the list of keys of the dictionary |

| sorted(d) | the keys of the dictionary, sorted |

| key in d | test whether a particular key is in the dictionary |

| for key in d | iterate over the keys of the dictionary |

| d.values() | the list of values in the dictionary |

| dict([(k1,v1), (k2,v2), ...]) | create a dictionary from a list of key-value pairs |

| d1.update(d2) | add all items from d2 to d1 |

| defaultdict(int) | a dictionary whose default value is zero |

13.7 Reusing Code

By this time you've probably typed and retyped a lot of code in the Python interactive interpreter. If you mess up when retyping a complex example you have to enter it again. Using the arrow keys to access and modify previous commands is helpful but only goes so far. In this section we see two important ways to reuse code: text editors and Python functions.

Creating Programs with a Text Editor

The Python interactive interpreter performs your instructions as soon as you type them. Often, it is better to compose a multi-line program using a text editor, then ask Python to run the whole program at once. Using IDLE, you can do this by going to the File menu and opening a new window. Try this now, and enter the following one-line program:

print('Monty Python')

Save this program in a file called monty.py, then go to the Run menu, and select the command Run Module. (We'll learn what modules are shortly.) The result in the main IDLE window should look like this:

|

You can also type from monty import * and it will do the same thing.

From now on, you have a choice of using the interactive interpreter or a text editor to create your programs. It is often convenient to test your ideas using the interpreter, revising a line of code until it does what you expect. Once you're ready, you can paste the code (minus any >>> or ... prompts) into the text editor, continue to expand it, and finally save the program in a file so that you don't have to type it in again later. Give the file a short but descriptive name, using all lowercase letters and separating words with underscore, and using the .py filename extension, e.g., monty_python.py.

Note

Important: Our inline code examples include the >>> and ... prompts as if we are interacting directly with the interpreter. As they get more complicated, you should instead type them into the editor, without the prompts, and run them from the editor as shown above. When we provide longer programs in this book, we will leave out the prompts to remind you to type them into a file rather than using the interpreter. You can see this already in 2.1 above. Note that it still includes a couple of lines with the Python prompt; this is the interactive part of the task where you inspect some data and invoke a function. Remember that all code samples like 2.1 are downloadable from http://nltk.org/.

Functions

Suppose that you work on analyzing text that involves different forms of the same word, and that part of your program needs to work out the plural form of a given singular noun. Suppose it needs to do this work in two places, once when it is processing some texts, and again when it is processing user input.

Rather than repeating the same code several times over, it is more efficient and reliable to localize this work inside a function. A function is just a named block of code that performs some well-defined task, as we saw in 13.3. A function is usually defined to take some inputs, using special variables known as parameters, and it may produce a result, also known as a return value. We define a function using the keyword def followed by the function name and any input parameters, followed by the body of the function.

|

In the definition of lexical_diversity(), we specify a parameter named text. This parameter is a "placeholder" for the actual text whose lexical diversity we want to compute, and reoccurs in the block of code that will run when the function is used.

We use the keyword return to indicate the value that is produced as output by the function. In the above example, all the work of the function is done in the return statement. Here's an equivalent definition which does the same work using multiple lines of code. We'll change the parameter name from text to my_text_data to remind you that this is an arbitrary choice:

|

Notice that we've created some new variables inside the body of the function. These are local variables and are not accessible outside the function. So now we have defined a function with the name lexical_diversity. But just defining it won't produce any output. Functions do nothing until they are "called" (or "invoked"). Let's compare the lexical diversity of the Book of Genesis with the lexical diversity of the Chat Corpus:

|

To recap, we use or call a function such as lexical_diversity() by typing its name, followed by an open parenthesis, the name of the text, and then a close parenthesis. These parentheses will show up often; their role is to separate the name of a task — such as lexical_diversity() — from the data that the task is to be performed on — such as text3. The data value that we place in the parentheses when we call a function is an argument to the function.

You have already encountered several functions, such as len() and sorted(). In our running prose, we will add an empty pair of parentheses after a function name, to make clear that we are talking about a function rather than some other kind of Python expression. Functions are an important concept in programming, and we only mention them at this point to give you a sense of the power and creativity of programming. Don't worry if you find it a bit confusing at first.

Later we'll see how to use functions when tabulating data, as in 13.7. Each row of the table will involve the same computation but with different data, and we'll do this repetitive work using a function.

| Genre | Tokens | Types | Lexical diversity |

|---|---|---|---|

| skill and hobbies | 82345 | 11935 | 0.145 |

| humor | 21695 | 5017 | 0.231 |

| fiction: science | 14470 | 3233 | 0.223 |

| press: reportage | 100554 | 14394 | 0.143 |

| fiction: romance | 70022 | 8452 | 0.121 |

| religion | 39399 | 6373 | 0.162 |

Let's return to our earlier scenario, and actually define a simple function to work out English plurals. The function plural() in 13.4 takes a singular noun and generates a plural form, though it is not always correct. (We'll discuss functions at greater length in 13.8.)

| ||

| ||

Example 13.4 (code_plural.py): Figure 13.4: A Python Function: this function tries to work out the plural form of any English noun; the keyword def (define) is followed by the function name, then a parameter inside parentheses, and a colon; the body of the function is the indented block of code; it tries to recognize patterns within the word and process the word accordingly; e.g., if the word ends with y, delete the y and add ies. |

The endswith() function is always associated with a string object (e.g., word in 13.4). To call such functions, we give the name of the object, a period, and then the name of the function. These functions are usually known as methods.

Modules

Over time you will find that you create a variety of useful little text processing functions, and you end up copying them from old programs to new ones. Which file contains the latest version of the function you want to use? It makes life a lot easier if you can collect your work into a single place, and access previously defined functions without making copies.

To do this, save your function(s) in a file called (say) text_proc.py. Now, you can access your work simply by importing it from the file:

|

Our plural function obviously has an error, since the plural of fan is fans. Instead of typing in a new version of the function, we can simply edit the existing one. Thus, at every stage, there is only one version of our plural function, and no confusion about which one is being used.

A collection of variable and function definitions in a file is called a Python module. A collection of related modules is called a package. NLTK's code for processing the Brown Corpus is an example of a module, and its collection of code for processing all the different corpora is an example of a package. NLTK itself is a set of packages, sometimes called a library.

Caution!

If you are creating a file to contain some of your Python code, do not name your file nltk.py: it may get imported in place of the "real" NLTK package. When it imports modules, Python first looks in the current directory (folder).

13.8 More on Functions

Functions provide an effective way to package and re-use program code, as already explained in 13.7. Functions allow us to abstract away from the details, to see a bigger picture, and to program more effectively.

Our choice of name for the function makes the program more readable by others, both where the function is defined, and where it is used.

Functions help make our programs more reliable. When we re-use code that has already been developed and tested, we can be more confident that it handles a variety of cases correctly. We also remove the risk that we forget some important step, or introduce a bug. The program that calls our function also has increased reliability. The author of that program is dealing with a shorter program, and its components behave transparently.

The rest of this section takes a closer look at functions, exploring the mechanics and discussing ways to make your programs easier to read.

Function Inputs and Outputs

We pass information to functions using a function's parameters, e.g.:

|

We first define the function to take two parameters, msg and num

![[1]](callouts/callout1.gif) . Then we call the function and pass it two arguments, monty and 3

. Then we call the function and pass it two arguments, monty and 3

![[2]](callouts/callout2.gif) ; these arguments fill the "placeholders" provided by the parameters and

provide values for the occurrences of msg and num in the function body.

; these arguments fill the "placeholders" provided by the parameters and

provide values for the occurrences of msg and num in the function body.

It is not necessary to have any parameters, as we see in the following example:

|

A function usually communicates its results back to the calling program via the return statement, as we have just seen. To the calling program, it looks as if the function call had been replaced with the function's result, e.g.:

|

A Python function is not required to have a return statement. Some functions do their work as a side effect, printing a result, modifying a file, or updating the contents of a parameter to the function (such functions are called "procedures" in some other programming languages).

Parameter Passing

Python interprets function parameters as values (this is known as call-by-value). In the following code, set_up() has two parameters, both of which are modified inside the function. We begin by assigning an empty string to w and an empty list to p. After calling the function, w is unchanged, while p is changed:

|

Notice that w was not changed by the function. When we called set_up(w, p), the value of w (an empty string) was assigned to a new variable word. Inside the function, the value of word was modified. However, that change did not propagate to w. This parameter passing is identical to the following sequence of assignments:

|

Let's look at what happened with the list p. When we called set_up(w, p), the value of p (a reference to an empty list) was assigned to a new local variable properties, so both variables now reference the same memory location. The function modifies properties, and this change is also reflected in the value of p as we saw. The function also assigned a new value to properties (the number 5); this did not modify the contents at that memory location, but created a new local variable. This behavior is just as if we had done the following sequence of assignments:

|

Thus, to understand Python's call-by-value parameter passing, it is enough to understand how assignment works. Remember that you can use the id() function and is operator to check your understanding of object identity after each statement.

Variable Scope

Function definitions create a new, local scope for variables. When you assign to a new variable inside the body of a function, the name is only defined within that function. The name is not visible outside the function, or in other functions. This behavior means you can choose variable names without being concerned about collisions with names used in your other function definitions.

When you refer to an existing name from within the body of a function, the Python interpreter first tries to resolve the name with respect to the names that are local to the function. If nothing is found, the interpreter checks if it is a global name within the module. Finally, if that does not succeed, the interpreter checks if the name is a Python built-in. This is the so-called LGB rule of name resolution: local, then global, then built-in.

Caution!

A function can enable access to a global variable using the global declaration. However, this practice should be avoided as much as possible. Defining global variables inside a function introduces dependencies on context and limits the portability (or reusability) of the function. In general you should use parameters for function inputs and return values for function outputs.

Checking Parameter Types

Python does not allow us to declare the type of a variable when we write a program, and this permits us to define functions that are flexible about the type of their arguments. For example, a tagger might expect a sequence of words, but it wouldn't care whether this sequence is expressed as a list or a tuple (or an iterator, another sequence type that is outside the scope of the current discussion).

However, often we want to write programs for later use by others, and want to program in a defensive style, providing useful warnings when functions have not been invoked correctly. The author of the following tag() function assumed that its argument would always be a string.

|

The function returns sensible values for the arguments 'the' and 'knight',

but look what happens when it is passed a list ![[1]](callouts/callout1.gif) — it fails to

complain, even though the result which it returns is clearly incorrect.

The author of this function could take some extra steps to

ensure that the word parameter of the tag() function is a string.

A naive approach would be to check the type of the argument using

if not type(word) is str, and if word is not a string, to simply

return Python's special empty value, None. This is a slight improvement, because

the function is checking the type of the argument, and trying to return a "special", diagnostic

value for the wrong input.

However, it is also dangerous because the calling program

may not detect that None is intended as a "special" value, and this diagnostic

return value may then be

propagated to other parts of the program with unpredictable consequences.

Here's a better solution, using an assert statement:

— it fails to

complain, even though the result which it returns is clearly incorrect.

The author of this function could take some extra steps to

ensure that the word parameter of the tag() function is a string.

A naive approach would be to check the type of the argument using

if not type(word) is str, and if word is not a string, to simply

return Python's special empty value, None. This is a slight improvement, because

the function is checking the type of the argument, and trying to return a "special", diagnostic

value for the wrong input.

However, it is also dangerous because the calling program

may not detect that None is intended as a "special" value, and this diagnostic

return value may then be

propagated to other parts of the program with unpredictable consequences.

Here's a better solution, using an assert statement:

|

If the assert statement fails, it will produce an error that cannot be ignored, since it halts program execution. Additionally, the error message is easy to interpret. Adding assertions to a program helps you find logical errors, and is a kind of defensive programming. A more fundamental approach is to document the parameters to each function using docstrings as described later in this section.

Accumulative Functions

These functions start by initializing some storage, and iterate over input to build it up, before returning some final object (a large structure or aggregated result). A standard way to do this is to initialize an empty list, accumulate the material, then return the list, as shown in function search1() in 13.5.

| ||

| ||

Example 13.5 (code_search_examples.py): Figure 13.5: Accumulating Output into a List |

The function search2() is a generator. The first time this function is called, it gets as far as the yield statement and pauses. The calling program gets the first word and does any necessary processing. Once the calling program is ready for another word, execution of the function is continued from where it stopped, until the next time it encounters a yield statement. This approach is typically more efficient, as the function only generates the data as it is required by the calling program, and does not need to allocate additional memory to store the output (cf. our discussion of generator expressions above).

Here's a more sophisticated example of a generator which produces

all permutations of a list of words. In order to force the permutations()

function to generate all its output, we wrap it with a call to list() ![[1]](callouts/callout1.gif) .

.

|

Note

The permutations function uses a technique called recursion, discussed below in sec-algorithm-design_. The ability to generate permutations of a set of words is useful for creating data to test a grammar (8.).

Higher-Order Functions

Python provides some higher-order functions that are standard features of functional programming languages such as Haskell. We illustrate them here, alongside the equivalent expression using list comprehensions.

Let's start by defining a function is_content_word() which checks whether a word is from the open class of content words. We use this function as the first parameter of filter(), which applies the function to each item in the sequence contained in its second parameter, and only retains the items for which the function returns True.

|

Another higher-order function is map(), which applies a function to every item in a sequence. Here is a simple way to find the average length of a sentence in the news section of the Brown Corpus, followed by an equivalent version with list comprehension calculation:

|

In the above examples we specified a user-defined function is_content_word() and a built-in function len(). We can also provide a lambda expression. Here's a pair of equivalent examples which count the number of vowels in each word.

|

The solutions based on list comprehensions are usually more readable than the solutions based on higher-order functions, and we have favored the former approach throughout this book.

Named Arguments

When there are a lot of parameters it is easy to get confused about the correct order. Instead we can refer to parameters by name, and even assign them a default value just in case one was not provided by the calling program. Now the parameters can be specified in any order, and can be omitted.

|

These are called keyword arguments. If we mix these two kinds of parameters, then we must ensure that the unnamed parameters precede the named ones. It has to be this way, since unnamed parameters are defined by position. We can define a function that takes an arbitrary number of unnamed and named parameters, and access them via an in-place list of arguments *args and an "in-place dictionary" of keyword arguments **kwargs. (Dictionaries will be presented in 13.6.)

|

When *args appears as a function parameter, it actually corresponds to all the unnamed parameters of the function. Here's another illustration of this aspect of Python syntax, for the zip() function which operates on a variable number of arguments. We'll use the variable name *song to demonstrate that there's nothing special about the name *args.

|

It should be clear from the above example that typing *song is just a convenient shorthand, and equivalent to typing out song[0], song[1], song[2].

Here's another example of the use of keyword arguments in a function definition, along with three equivalent ways to call the function:

|

A side-effect of having named arguments is that they permit optionality. Thus we can leave out any arguments where we are happy with the default value: freq_words('ch01.rst', min=4), freq_words('ch01.rst', 4). Another common use of optional arguments is to permit a flag. Here's a revised version of the same function that reports its progress if a verbose flag is set:

|

Caution!

Take care not to use a mutable object as the default value of a parameter. A series of calls to the function will use the same object, sometimes with bizarre results as we will see in the discussion of debugging below.

Caution!

If your program will work with a lot of files, it is a good idea to close any open files once they are no longer required. Python will close open files automatically if you use the with statement:

|

13.9 Exercises

☼ Try using the Python interpreter as a calculator, and typing expressions like 12 / (4 + 1).

☼ Given an alphabet of 26 letters, there are 26 to the power 10, or 26 ** 10, ten-letter strings we can form. That works out to 141167095653376L (the L at the end just indicates that this is Python's long-number format). How many hundred-letter strings are possible?

☼ The Python multiplication operation can be applied to lists. What happens when you type ['Monty', 'Python'] * 20, or 3 * sent1?

☼ Review 13.3 on vocabularies as sets of words. How many words are there in text2? How many distinct words are there?

☼ Compare the lexical diversity scores for humor and romance fiction in 13.7. Which genre is more lexically diverse?

☼ Produce a dispersion plot of the four main protagonists in Sense and Sensibility: Elinor, Marianne, Edward, and Willoughby. What can you observe about the different roles played by the males and females in this novel? Can you identify the couples?

☼ Find the collocations in text5.

☼ Consider the following Python expression: len(set(text4)). State the purpose of this expression. Describe the two steps involved in performing this computation.

☼ Review 13.2 on lists and strings.

- Define a string and assign it to a variable, e.g., my_string = 'My String' (but put something more interesting in the string). Print the contents of this variable in two ways, first by simply typing the variable name and pressing enter, then by using the print statement.

- Try adding the string to itself using my_string + my_string, or multiplying it by a number, e.g., my_string * 3. Notice that the strings are joined together without any spaces. How could you fix this?

☼ Define a variable my_sent to be a list of words, using the syntax my_sent = ["My", "sent"] (but with your own words, or a favorite saying).

- Use ' '.join(my_sent) to convert this into a string.

- Use split() to split the string back into the list form you had to start with.

☼ Define several variables containing lists of words, e.g., phrase1, phrase2, and so on. Join them together in various combinations (using the plus operator) to form whole sentences. What is the relationship between len(phrase1 + phrase2) and len(phrase1) + len(phrase2)?

☼ Consider the following two expressions, which have the same value. Which one will typically be more relevant in NLP? Why?

- "Monty Python"[6:12]

- ["Monty", "Python"][1]

☼ We have seen how to represent a sentence as a list of words, where each word is a sequence of characters. What does sent1[2][2] do? Why? Experiment with other index values.

☼ The first sentence of text3 is provided to you in the variable sent3. The index of the in sent3 is 1, because sent3[1] gives us 'the'. What are the indexes of the two other occurrences of this word in sent3?

☼ Review the discussion of conditionals in 13.4. Find all words in the Chat Corpus (text5) starting with the letter b. Show them in alphabetical order.

☼ Type the expression list(range(10)) at the interpreter prompt. Now try list(range(10, 20)), list(range(10, 20, 2)), and list(range(20, 10, -2)). We will see a variety of uses for this built-in function in later chapters.

☼ Find out more about sequence objects using Python's help facility. In the interpreter, type help(str), help(list), and help(tuple). This will give you a full list of the functions supported by each type. Some functions have special names flanked with underscore; as the help documentation shows, each such function corresponds to something more familiar. For example x.__getitem__(y) is just a long-winded way of saying x[y].

☼ Identify three operations that can be performed on both tuples and lists. Identify three list operations that cannot be performed on tuples. Name a context where using a list instead of a tuple generates a Python error.

☼ Find out how to create a tuple consisting of a single item. There are at least two ways to do this.

☼ Create a list words = ['is', 'NLP', 'fun', '?']. Use a series of assignment statements (e.g. words[1] = words[2]) and a temporary variable tmp to transform this list into the list ['NLP', 'is', 'fun', '!']. Now do the same transformation using tuple assignment.

☼ Does the method for creating a sliding window of n-grams behave correctly for the two limiting cases: n = 1, and n = len(sent)?

☼ We pointed out that when empty strings and empty lists occur in the condition part of an if clause, they evaluate to False. In this case, they are said to be occurring in a Boolean context. Experiment with different kind of non-Boolean expressions in Boolean contexts, and see whether they evaluate as True or False.

☼ Use the inequality operators to compare strings, e.g. 'Monty' < 'Python'. What happens when you do 'Z' < 'a'? Try pairs of strings which have a common prefix, e.g. 'Monty' < 'Montague'. Read up on "lexicographical sort" in order to understand what is going on here. Try comparing structured objects, e.g. ('Monty', 1) < ('Monty', 2). Does this behave as expected?

☼ Write code that removes whitespace at the beginning and end of a string, and normalizes whitespace between words to be a single space character.

- do this task using split() and join()

- do this task using regular expression substitutions

◑ Use text9.index() to find the index of the word sunset. You'll need to insert this word as an argument between the parentheses. By a process of trial and error, find the slice for the complete sentence that contains this word.

◑ Using list addition, and the set and sorted operations, compute the vocabulary of the sentences sent1 ... sent8.

◑ What is the difference between the following two lines? Which one will give a larger value? Will this be the case for other texts?

>>> sorted(set(w.lower() for w in text1)) >>> sorted(w.lower() for w in set(text1))

◑ What is the difference between the following two tests: w.isupper() and not w.islower()?

◑ Write the slice expression that extracts the last two words of text2.

◑ Find all the four-letter words in the Chat Corpus (text5). With the help of a frequency distribution (FreqDist), show these words in decreasing order of frequency.

◑ Review the discussion of looping with conditions in 13.4. Use a combination of for and if statements to loop over the words of the movie script for Monty Python and the Holy Grail (text6) and print all the uppercase words, one per line.

◑ Write expressions for finding all words in text6 that meet the conditions listed below. The result should be in the form of a list of words: ['word1', 'word2', ...].

- Ending in ize

- Containing the letter z

- Containing the sequence of letters pt

- Having all lowercase letters except for an initial capital (i.e., titlecase)

◑ Define sent to be the list of words ['she', 'sells', 'sea', 'shells', 'by', 'the', 'sea', 'shore']. Now write code to perform the following tasks:

- Print all words beginning with sh

- Print all words longer than four characters

◑ What does the following Python code do? sum(len(w) for w in text1) Can you use it to work out the average word length of a text?

◑ Define a function called vocab_size(text) that has a single parameter for the text, and which returns the vocabulary size of the text.

◑ Define a function percent(word, text) that calculates how often a given word occurs in a text, and expresses the result as a percentage.

◑ We have been using sets to store vocabularies. Try the following Python expression: set(sent3) < set(text1). Experiment with this using different arguments to set(). What does it do? Can you think of a practical application for this?

◑ Write code to sort words by length. Consult the Python documentation concerning the key parameter.

◑ Create a list of words and store it in a variable sent1. Now assign sent2 = sent1. Modify one of the items in sent1 and verify that sent2 has changed.

- Now try the same exercise but instead assign sent2 = sent1[:]. Modify sent1 again and see what happens to sent2. Explain.

- Now define text1 to be a list of lists of strings (e.g. to represent a text consisting of multiple sentences. Now assign text2 = text1[:], assign a new value to one of the words, e.g. text1[1][1] = 'Monty'. Check what this did to text2. Explain.

- Load Python's deepcopy() function (i.e. from copy import deepcopy), consult its documentation, and test that it makes a fresh copy of any object.

◑ Initialize an n-by-m list of lists of empty strings using list multiplication, e.g. word_table = [[''] * n] * m. What happens when you set one of its values, e.g. word_table[1][2] = "hello"? Explain why this happens. Now write an expression using range() to construct a list of lists, and show that it does not have this problem.

◑ Write code to initialize a two-dimensional array of sets called word_vowels and process a list of words, adding each word to word_vowels[l][v] where l is the length of the word and v is the number of vowels it contains.

◑ Write a function novel10(text) that prints any word that appeared in the last 10% of a text that had not been encountered earlier.

◑ Write a program that takes a sentence expressed as a single string, splits it and counts up the words. Get it to print out each word and the word's frequency, one per line, in alphabetical order.

◑ Write a function shorten(text, n) to process a text, omitting the n most frequently occurring words of the text. How readable is it?

◑ Write code to print out an index for a lexicon, allowing someone to look up words according to their meanings (or pronunciations; whatever properties are contained in lexical entries).

◑ Write a list comprehension that sorts a list of WordNet synsets for proximity to a given synset. For example, given the synsets minke_whale.n.01, orca.n.01, novel.n.01, and tortoise.n.01, sort them according to their shortest_path_distance() from right_whale.n.01.

◑ Write a function that takes a list of words (containing duplicates) and returns a list of words (with no duplicates) sorted by decreasing frequency. E.g. if the input list contained 10 instances of the word table and 9 instances of the word chair, then table would appear before chair in the output list.

◑ Write a function that takes a text and a vocabulary as its arguments and returns the set of words that appear in the text but not in the vocabulary. Both arguments can be represented as lists of strings. Can you do this in a single line, using set.difference()?

◑ Import the itemgetter() function from the operator module in Python's standard library (i.e. from operator import itemgetter). Create a list words containing several words. Now try calling: sorted(words, key=itemgetter(1)), and sorted(words, key=itemgetter(-1)). Explain what itemgetter() is doing.